The Digital Services (balancing) Act: protecting consumers while enabling businesses

On 15 December 2020, the European Commission published its long-awaited proposals for a new Digital Services Act (‘DSA’) and Digital Markets Act (‘DMA’).1,2 These landmark pieces of legislation will have wide-reaching effects throughout Europe’s economy. Here in Today’s Agenda, we consider some of the implications of the DSA for online platforms and their business users.

The DSA updates the 20-year-old e-Commerce Directive (‘ECD’) and will set the rules governing online intermediaries in Europe.3 The new rules will have a direct impact on the online platform operators they apply to. However, the changes that those platforms implement in response to the rules will also have a knock-on effect on users, including both consumers and businesses across the EU.

Oxera recently published a study commissioned by Allied for Startups, which examined these potential knock-on effects.4 In this article, we draw on that study to reflect on the opportunities and challenges that the DSA proposals will present for online platforms and their users.

What is the DSA?

The rules set out in the DSA specify the liabilities and responsibilities of online intermediaries, with a focus on issues of safety and illegal content online. They aim to update, clarify and enhance the legal framework for online intermediaries set out in the ECD by:

- updating the responsibilities and obligations of online intermediaries to keep users safe from illegal content, goods and services;

- minimising the fragmentation of rules within the digital single market;

- complementing forthcoming, pre-existing, and sector-specific regulation (such as the EU’s new terrorist content Regulation, the GDPR, and the Copyright Directive).

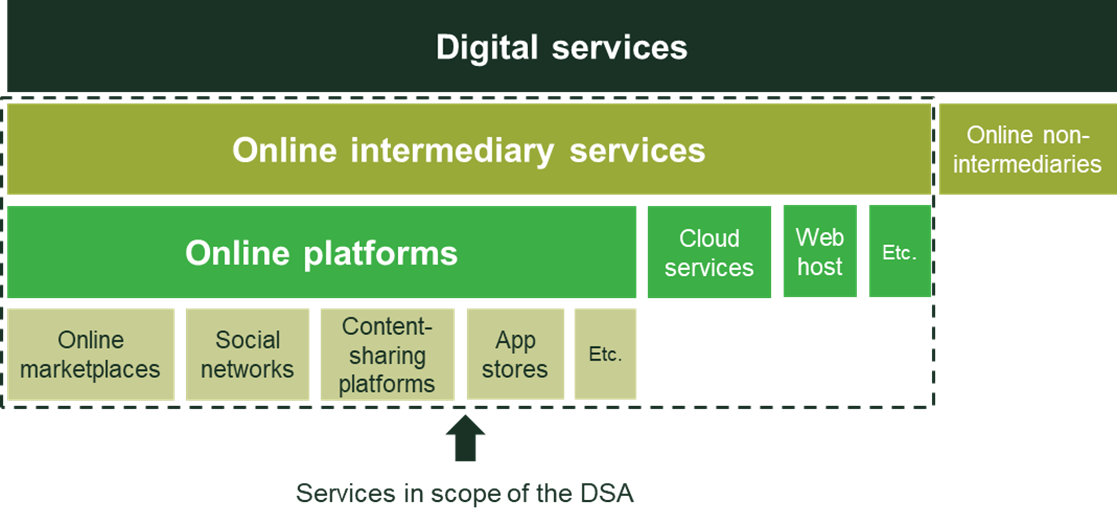

The DSA will apply to a wide range of online intermediaries, as outlined in the figure below.

Figure 1 Services in scope of the DSA

What do the proposals say?

Our research found that there is a strong appetite among both platforms and their users for increased trust and safety online. However, poorly designed rules could lead to unintended consequences, such as the removal of valued platform features, admin burdens for small businesses, or barriers to platform growth. We consider three key aspects of the DSA alongside the results of our study to assess the likely balance of these effects.

Content moderation and liability

Online platforms play an important role in monitoring and moderating content, which can help protect their users. One hotly debated topic has been the degree to which platforms should be liable for any third-party illegal content that is uploaded but not caught by automated filters.

An effective intermediary liability regime must balance the incentives for platforms to innovate and provide valued services to their users against the need to enforce laws and protect consumers. Our research found that, faced with uncapped liability, platforms could be forced to take actions to mitigate their legal risk that would erode value for their users. For example, they might:

- remove features and functionalities—for instance, review platforms might prohibit ‘free-text’ reviews and photos, which we found could make it more difficult for businesses to attract new customers;

- incorrectly take down legitimate content—overly cautious moderation processes may be adopted to mitigate the liability risk, which we found could reduce revenues for business users.

An integral part of the DSA proposals is the continuation of a limited liability regime for online intermediaries, which helps to protect innovation and avoids these negative consequences for users. Importantly, the DSA clarifies the type of intermediary services eligible for liability exemptions, and the conditions that must be met, providing platforms with greater certainty and legal clarity. ‘Hosting’ services, including online platforms, are exempt from liability if they do not have actual knowledge of the illegal content, and quickly remove or disable access to it once they become aware.

Online platforms have a strong natural incentive to invest in technologies and processes that protect users and increase the value of their online ecosystem. Therefore, the step taken in the DSA to remove disincentives for voluntary action is welcome. This will enable platforms to take proactive measures without fear of losing their liability protections, helping to tackle the spread of illegal content online.

At the same time, the DSA proposes a suite of procedural obligations (i.e. rules that must be followed with regard to processes and procedures) for online platforms, including: notice-and-action mechanisms and working with trusted flaggers; safeguarding measures against misuse; and transparency and reporting obligations. These measures will help increase trust and safety online, and our research found that businesses anticipate that this would lead to increased sales. The DSA also provides for internal complaint-handling systems and options for redress. This could prove valuable, given that our research found that businesses expected to lose revenue if their content was incorrectly removed.

Our research suggested that procedural obligations with defined sanctions could enable these benefits, while still giving platforms the clarity they need to manage their business effectively. As regards possible penalty amounts, we note that while a maximum level has been set (at 6% of annual turnover) for infringements of the Regulation, the level of fines that different breaches attract will remain unclear until real-life cases come to light.

Importantly, the DSA proposals also leave the door open to platforms’ adoption of scalable, technology-led solutions—such as AI—to address the requirements. This is a welcome outcome, as our research found that prescriptive measures, such as zero-tolerance policies or requiring human oversight of every issue, could prevent platforms from innovating, limit growth, and even put certain digital business models at risk.

Thresholds based on a platform’s type and size

An important way in which online platforms create value is by bringing together two or more sides of a market to facilitate an exchange. Businesses and consumers benefit from positive network effects as the platform grows.

Network effects

Platform users can benefit from either direct or indirect network effects (or both), depending on the type of platform and what it is being used for.

Direct network effects mean that the value to users increases as more users join the same side of the platform (e.g. more of your friends join a social media service).

Indirect network effects mean that the value to users on one side of a platform increases as more users join the other side. This can be a two-way effect, as is the case for buyers and sellers using an online marketplace; or there can be a one-way effect, such as the benefit to advertisers if more people join a video-streaming platform.

Either way, the value of the platform ecosystem increases as the number of users grows.

The DSA applies different obligations to intermediaries based on the:

- type of service—hosting services and online platforms face additional obligations;

- enterprise size—micro and small enterprises are excluded from certain obligations;

- number of users—‘very large’ online platforms face additional obligations.

Applying risk-based measures based on the type of the service can help achieve online safety—online platforms are more exposed to illegal content and therefore face additional obligations to reflect this. Moreover, excluding micro and small enterprises from disproportionate obligations will help enable start-up and scale-up platforms to grow without facing regulatory burdens. Importantly, these thresholds are compatible with platforms’ incentive to grow and increase the value of their ecosystems.

Perhaps the most important regulatory threshold is the designation of online platforms with more than 45 million users as being ‘very large’. The Commission considers that such platforms play an important role due to their reach, and that additional obligations are necessary to address public policy concerns and the societal risks such platforms may pose.

These ‘very large’ online platforms will face obligations in addition to those described above, including, for example, risk assessments, mitigation measures, and further transparency and reporting obligations. This will create additional burdens and require greater engagement and transparency from these platforms.
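The layering of obligations described above can be illustrated with a short sketch. This is a simplified reading of the tiers as summarised in this article, not a statement of the legal text: the class, the function, the tier labels and the service-type labels are all hypothetical, and the sketch assumes that a platform's user count is already known.

```python
from dataclasses import dataclass

# Threshold quoted in this article for 'very large' online platforms.
VERY_LARGE_USER_THRESHOLD = 45_000_000


@dataclass
class Intermediary:
    """Hypothetical description of a service, for illustration only."""
    name: str
    service_type: str        # "other_intermediary", "hosting" or "online_platform"
    is_micro_or_small: bool  # micro and small enterprises are excluded from certain obligations
    users: int


def obligation_tiers(service: Intermediary) -> list[str]:
    """Return the (illustrative) tiers of obligations that stack up for a service."""
    tiers = ["all_intermediaries"]

    # Online platforms are treated here as a subset of hosting services.
    if service.service_type in ("hosting", "online_platform"):
        tiers.append("hosting")

    # Assumption: the small-enterprise exclusion is modelled as switching off
    # the platform-specific tiers entirely, which is a simplification.
    if service.service_type == "online_platform" and not service.is_micro_or_small:
        tiers.append("online_platform")
        if service.users > VERY_LARGE_USER_THRESHOLD:
            tiers.append("very_large_online_platform")

    return tiers


if __name__ == "__main__":
    examples = [
        Intermediary("start-up marketplace", "online_platform", True, 20_000),
        Intermediary("mid-sized review site", "online_platform", False, 5_000_000),
        Intermediary("large social network", "online_platform", False, 60_000_000),
    ]
    for example in examples:
        print(example.name, "->", obligation_tiers(example))
```

The point of the sketch is that obligations are cumulative: each tier adds to those below it, so the step into the ‘very large’ category brings the largest set of requirements.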

This progressive approach might be thought to be a reasonable attempt at regulation that grows with the scale of the platform. However, this threshold could create a ‘cliff edge’ of regulatory compliance for ‘very large’ platforms, which could potentially disincentivise growth beyond this level: in deciding whether to grow its user base, a platform may trade off the marginal growth beyond this threshold against the additional costs of compliance.
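A stylised calculation can make the ‘cliff edge’ concrete. The sketch below assumes, purely for illustration, that each user is worth a constant amount to the platform and that crossing the 45 million threshold triggers a fixed annual compliance cost. Both figures are hypothetical and are not taken from our study or from the DSA proposals.

```python
# Threshold quoted in this article for 'very large' online platforms.
THRESHOLD = 45_000_000


def net_value(users: int, value_per_user: int, compliance_cost: int) -> int:
    """Platform value net of the extra compliance cost (hypothetical model).

    The cost applies only once the user base exceeds the threshold,
    which is what produces the discontinuity.
    """
    gross = users * value_per_user
    return gross - (compliance_cost if users > THRESHOLD else 0)


def break_even_users(value_per_user: int, compliance_cost: int) -> float:
    """User count at which net value returns to its level at the threshold."""
    return THRESHOLD + compliance_cost / value_per_user


if __name__ == "__main__":
    value_per_user = 2            # hypothetical: EUR 2 of value per user per year
    compliance_cost = 10_000_000  # hypothetical: EUR 10m per year above the threshold

    for users in (45_000_000, 46_000_000, 50_000_000, 55_000_000):
        print(users, net_value(users, value_per_user, compliance_cost))

    print("break-even users:", break_even_users(value_per_user, compliance_cost))
```

Under these assumptions, a platform sitting exactly at the threshold is worse off after adding a further million users, and only recovers its previous net value at around 50 million users. In practice, compliance costs are unlikely to be a single fixed amount, so the sketch indicates the direction of the incentive rather than its size.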

Know Your Business Customer

Requiring platforms to take steps to validate their business customers can help build integrity and trust in the digital ecosystem. Our research found that while this could benefit businesses (e.g. due to customers having more faith in their business and there being less competition from fake accounts), this needs to be balanced against the negative effects (e.g. increased admin costs and reduced multi-homing).

The DSA proposes Know Your Business Customer (‘KYBC’) obligations, which require online platforms to collect information on traders prior to their use of the service. Platforms should make ‘reasonable efforts’ to verify parts of this information using freely available online databases, or by requesting trustworthy documents from traders. This approach could help protect users and deliver benefits to business users while mitigating negative impacts of burdensome information and verification requirements.

The KYBC requirement is targeted at tracing sellers of illegal goods or services on online marketplaces.5 However, there is a degree of ambiguity relating to how this may apply to other types of platforms, such as gig-working platforms, through which gig workers provide their services to businesses and consumers, given the definitions of ‘consumers’ and ‘traders’. This may require further clarification.

Finding a balance?

Our study assessed the likely effect of three broad policy scenarios:

- increased liability—making platforms legally responsible for third-party content posted on their site;

- procedural obligations—setting out procedures for platforms to tackle illegal content, goods, and services, enforced with defined sanctions and harmonised across the EU;

- automatic filtering—enabling platforms to increase the amount of content monitoring they do, using technological solutions.

These resulted in substantially different knock-on effects for EU businesses using online platforms, with the ‘increased liability’ scenario being expected to reduce revenues (e.g. by up to 4.1% for small businesses), while the ‘procedural obligations’ and ‘automatic filtering’ scenarios are expected to increase revenues (e.g. by up to 4.4% for travel and tourism businesses).

The content moderation and liability provisions in the DSA proposals for online platforms are broadly in line with those tested under the ‘procedural obligations’ and ‘automatic filtering’ scenarios. In addition, the choice of instrument and the reassertion of the country-of-origin principle, complemented by increased coordination of national Digital Services Coordinators, will help increase regulatory consistency around the EU, promoting platform growth.

However, ‘very large’ platforms face a greater challenge, with significant additional obligations that are likely to increase administrative burdens and require more transparency and engagement with authorities and other parties. An important question that requires further consideration is whether this strikes the right balance between protecting users and enabling these platforms to innovate and provide valued services.

The publication of the Commission’s DSA proposals is a significant milestone in the European Digital Strategy and provides welcome clarity on the liabilities and responsibilities of online intermediaries. However, further debates are yet to take place and large hurdles remain, including scrutiny from the European Parliament and Council, before the DSA is adopted into law.

1 Proposal for a Regulation of the European Parliament and of the Council on a Single Market For Digital Services (Digital Services Act) and amending Directive 2000/31/EC, 15 December 2020.

2 Proposal for a Regulation of the European Parliament and of the Council on contestable and fair markets in the digital sector (Digital Markets Act), 15 December 2020.

3 Directive 2000/31/EC of the European Parliament and of the Council of 8 June 2000 on certain legal aspects of information society services, in particular electronic commerce, in the Internal Market (Directive on electronic commerce).

4 Oxera (2020), ‘The impact of the Digital Services Act on business users’, Study prepared for Allied for Startups, 23 October.

5 European Commission (2020), ‘Press release: Europe fit for the Digital Age: Commission proposes new rules for digital platforms’, 15 December.
